20251212-103453

1

Slide 1


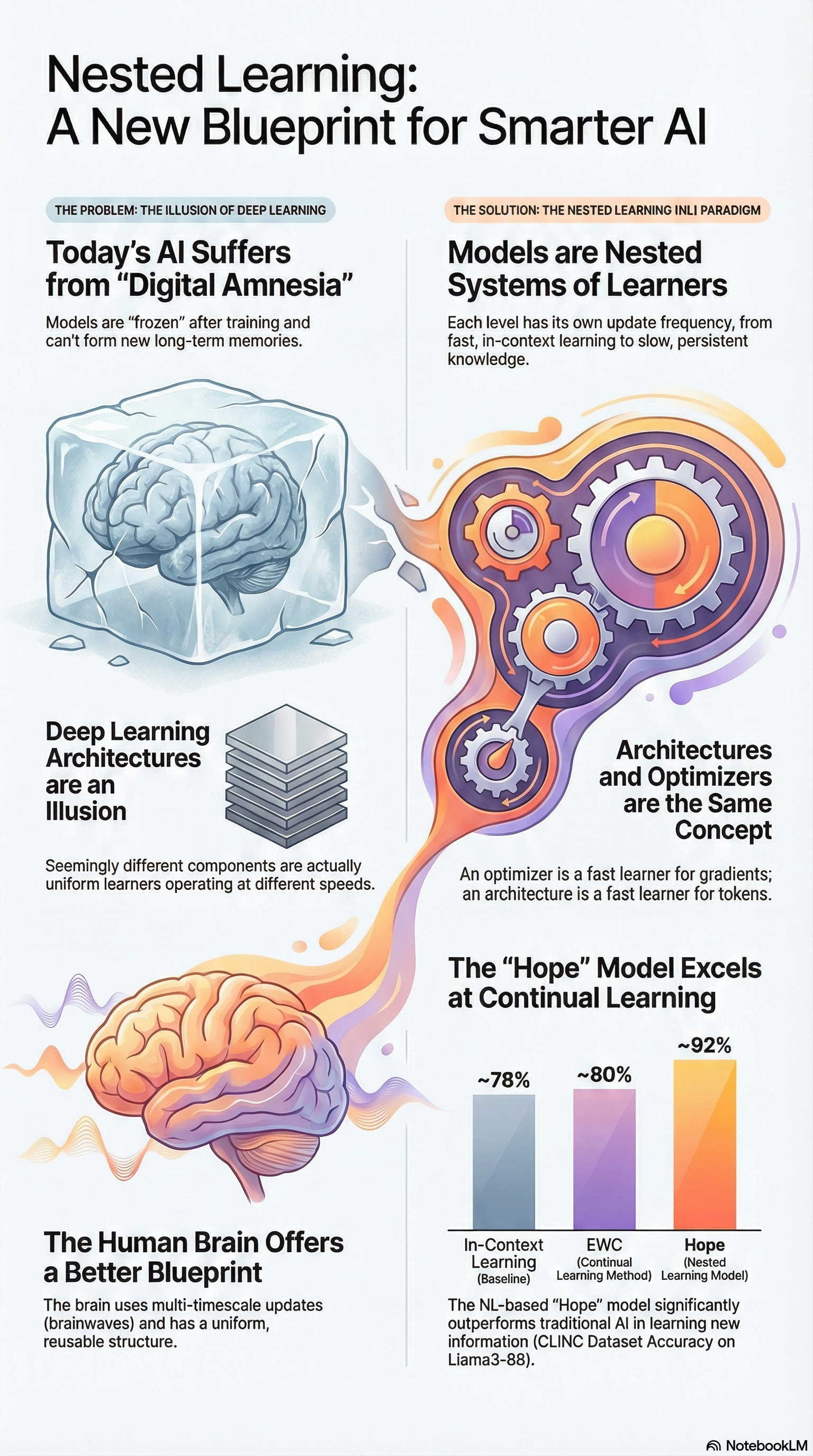

THE SOLUTION: THE NESTED LEARNING (NL) PARADIGM

THE PROBLEM: THE ILLUSION OF DEEP LEARNING

The NL-based "Hope" model significantly outperforms traditional AI in learning new information (CLINC Dataset Accuracy on Llama3-8B).

The brain uses multi-timescale updates (brainwaves) and has a uniform, reusable structure.

(Nested Learning Model)

(Continual Learning Method)

Each level has its own update frequency, from fast, in-context learning to slow, persistent knowledge.
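The idea of levels that update at different frequencies can be illustrated with a toy sketch. This is not the Hope model or any published implementation: the `Level` class, the `period` values, and the scalar regression task are all hypothetical, chosen only to show a fast level updating every step while slower levels accumulate gradients and update rarely.

```python
from dataclasses import dataclass


@dataclass
class Level:
    """One learner in the nest. `period` is a hypothetical update interval in steps."""
    name: str
    period: int
    lr: float
    weight: float = 0.0
    grad_sum: float = 0.0
    updates: int = 0


def train(levels, targets):
    """Fit the sum of all level weights to a stream of scalar targets."""
    for step, target in enumerate(targets, start=1):
        prediction = sum(level.weight for level in levels)
        grad = 2.0 * (prediction - target)  # derivative of the squared error
        for level in levels:
            level.grad_sum += grad  # every level sees every gradient
            if step % level.period == 0:
                # Slow levels apply the average gradient seen since their last update.
                level.weight -= level.lr * level.grad_sum / level.period
                level.grad_sum = 0.0
                level.updates += 1
    return levels


levels = train(
    [Level("fast", 1, 0.1), Level("medium", 4, 0.05), Level("slow", 16, 0.01)],
    targets=[1.0] * 32,
)
update_counts = {level.name: level.updates for level in levels}
```

Over 32 steps the fast level updates on every step, the medium level on every fourth step, and the slow level only twice, which is the sense in which each level has its own timescale.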

Models are "frozen" after training and can't form new long-term memories.

Models are Nested Systems of Learners

Today's AI Suffers from "Digital Amnesia"

Hope

EWC

In-Context Learning (Baseline)

The Human Brain Offers a Better Blueprint

~80%

~78%

~92%

An optimizer is a fast learner for gradients; an architecture is a fast learner for tokens.
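One way to make the "optimizer as a learner for gradients" reading concrete is to note that an exponential-moving-average momentum update is algebraically the same as one gradient-descent step on the inner objective 0.5 * (m - g)^2, with step size 1 - beta. The sketch below checks that identity numerically. The function names and the choice of inner objective are assumptions made for illustration and are not necessarily the formulation the slide's source uses.

```python
def ema_momentum(m, g, beta):
    """Standard exponential-moving-average momentum update."""
    return beta * m + (1.0 - beta) * g


def inner_gradient_step(m, g, beta):
    """One gradient step on 0.5 * (m - g)**2 with respect to m, step size 1 - beta."""
    inner_grad = m - g
    return m - (1.0 - beta) * inner_grad


gradients = [0.5, -1.0, 2.0, 0.25]  # made-up gradient stream
m_ema = m_inner = 0.0
max_gap = 0.0
for g in gradients:
    m_ema = ema_momentum(m_ema, g, beta=0.9)
    m_inner = inner_gradient_step(m_inner, g, beta=0.9)
    max_gap = max(max_gap, abs(m_ema - m_inner))
```

Under this view the momentum buffer is itself a small model being trained to remember recent gradients, just as a network's weights are trained to remember data.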

The "Hope" Model Excels at Continual Learning

Seemingly different components are actually uniform learners operating at different speeds.

Architectures and Optimizers are the Same Concept

Deep Learning Architectures are an Illusion

Nested Learning: A New Blueprint for Smarter AI
