20251212-103449


Slide 1


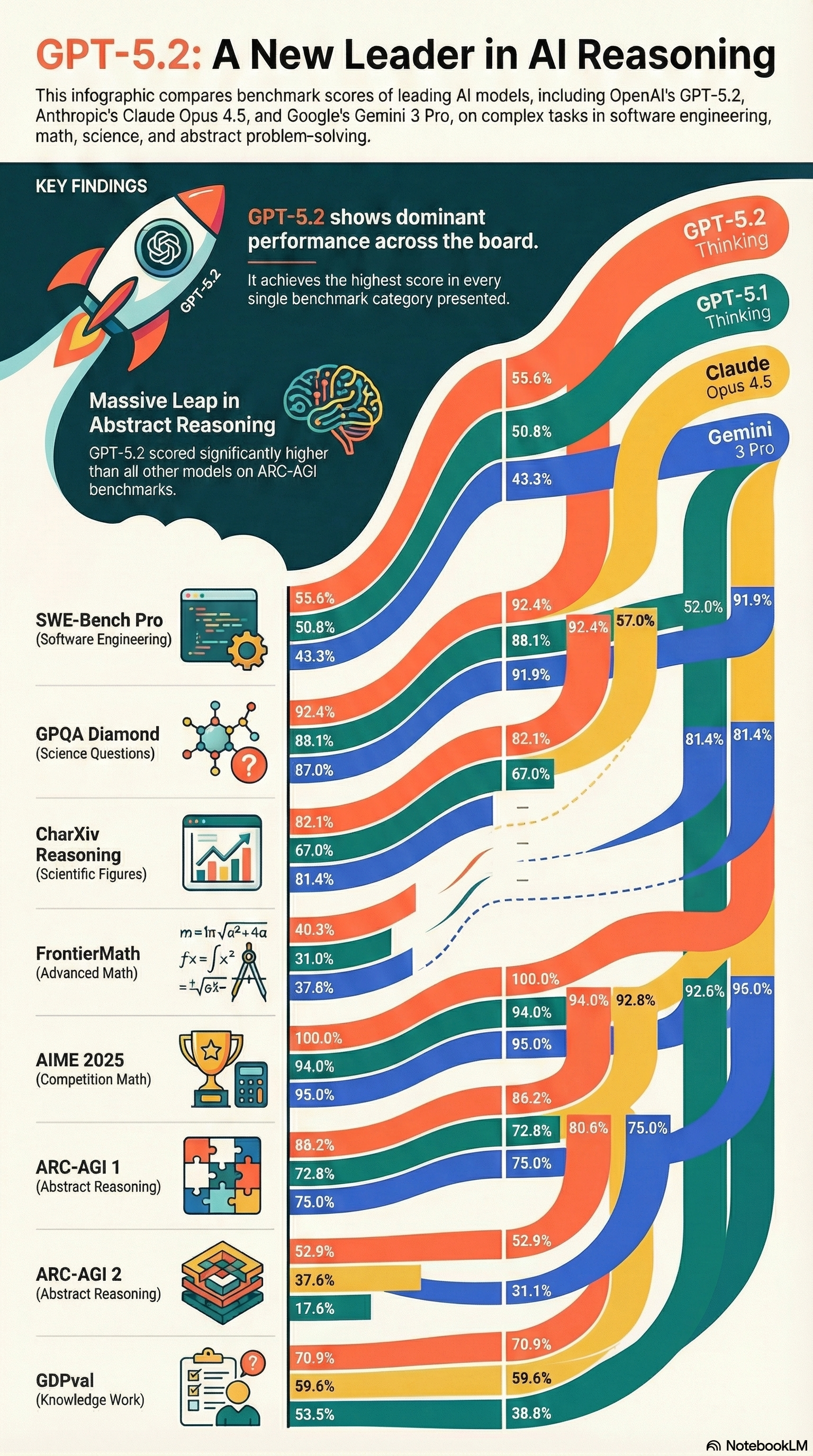

[Chart: benchmark scores (%) of the compared models on GDPval (Knowledge Work), ARC-AGI-2 (Abstract Reasoning), ARC-AGI-1 (Abstract Reasoning), AIME 2025 (Competition Math), FrontierMath (Advanced Math), CharXiv Reasoning (Scientific Figures), GPQA Diamond (Science Questions), and SWE-Bench Pro (Software Engineering)]

Massive Leap in Abstract Reasoning

KEY FINDINGS

This infographic compares benchmark scores of leading AI models, including OpenAI's GPT-5.2, Anthropic's Claude Opus 4.5, and Google's Gemini 3 Pro, on complex tasks in software engineering, math, science, and abstract problem-solving.

GPT-5.2: A New Leader in AI Reasoning


GPT-5.2 scored significantly higher than all other models on the ARC-AGI benchmarks.

GPT-5.2 shows dominant performance across the board: it achieves the highest score in every benchmark category presented.